Extreme Multi-label classification - FastXML

1. Introduction

Previously we explored multi-label algorithms using the Scikit-multilearn

link to ranking, search engine

2. Datasets

Datasets are

3. Metrics - Normalized Discounted Cumulative Gain (nDCG@k)

The notion of what constitutes a good prediction changes when we go from a few labels to millions of labels. The number of relevant/positive labels for any data point is significantly smaller than the set of possible labels. As such, typical multi-label metrics that we have used such as F1-score and Hamming loss would give equal weight to positive and negative labels. It is more important for us to correctly predict the positive labels vs the negative labels.

To favor positive labels we limit our metric to the top k results. For example, precision at only focuses on how many of the top k positive labels are predicted. It is thus sensitive to the relevance of the prediction. However it does not take the rank of the results into account.

Precision@k = (# of predicted items @k that are relevant) /

(# of predicted items @k)

If for example, predicting only 1 relevant item will return a precision@5 of 20%, regardless if it is the 1st or 5th item.

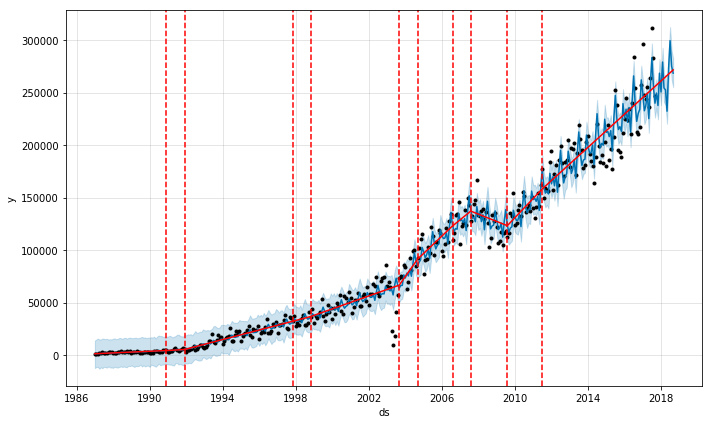

To incorporate a measure of the ranking quality, we need a rank sensitive loss function. A popular metric used for information retrieval is the normalized discounted cumulative gain. To items are required to construct the nDCG; a relevance score and a ranking function. The relevance score or gain assigns a real number to the recommendations; the higher the number, the more relevant. In our case we simplify by having binary relevance in . The ranking function is essentially a permutation, reordering the integers in .

The ranking will return an ordering for our labels. To get the cumulative gain at k, we sum the respective gains for the first ordered labels. Discounting is when we divide the respective terms in the gain by an increasing function, usually logarithmic in order to favor the higher ranked gains, this gives us the discounted cumulative gain at k or DCG@k. Finally, in order to compare different label vectors with different numbers of positive labels, we normalize the DCG@k for it to lie between 0 and 1.

In formal mathematical terms, our multi-label case we have the data points where are dimensional real feature vectors and are the dimensional binary label vectors ( if the label is relevant for point ). Let r be a permutation of and . DCG@k for r and is defined as:

Now to get it between 0 and 1 we divide by the sum of up to k or the number of positive labels in , whichever is smaller:

where :

| Rank | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|

| Relevance | 1 | 0 | 0 | 1 | 0 |

Relevance of the top 5 predicted labels

4. FastXML

An efficient methode that is able to scale to millions of labels is the FastXML algorithm by Yashoteja Prabhu and Manik Varma as described in their paper.

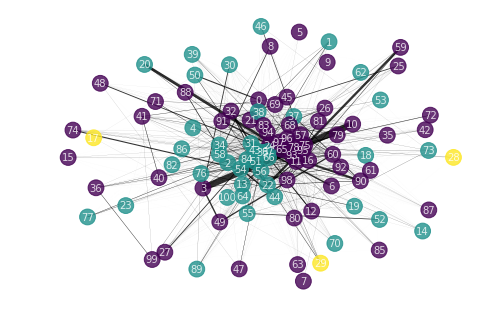

FastXML is a tree based classifier algorithm that partitions the feature space instead of the label space. The intuition is that there are only a few labels associated with a region of the feature space. At any region of the feature space, the set of active labels are the union of the labels of all the region’s training points. At each node, the parent feature space is partitioned into a left and right child space. In decision trees, Gini impurity, Information gain or clustering error is typically used to split each node.

As these measures are not suited for extreme multi-label applications, the authors have proposed to use nDCG instead as it is a ranking loss function. This will recommend better labels as relevant positive labels with the highest rank are returned. nDCG is optimized across all labels at the current node;

Refefer implemented the FastXML algorithm in python/cython as share in this github repository